March 17, 2025 | Tech News | Special Report

NVIDIA GTC 2025, running from today through March 21 in San Jose, California, has become the focal point of the global AI industry. This year’s event has drawn particular attention for the unveiling of NVIDIA’s next-generation AI server chip, the B300 series.

B300 Delivers 50% Performance Gain and Expands Memory to 288GB

The B300 series delivers a 50% performance improvement over its predecessor, the B200, and raises memory capacity from 192GB to 288GB. The larger memory configuration has increased adoption of SK Hynix’s High-Bandwidth Memory (HBM), and industry analysts report that the entire 2025 HBM production capacity has already been secured through advance commitments.

At the core of the B300 GPU is 12-layer HBM3E memory, which provides 288GB of capacity per GPU and 8TB/s of bandwidth. In an official statement, NVIDIA emphasized, “This revolutionary memory expansion technology will fundamentally transform AI model training and inference capabilities, while achieving unprecedented cost efficiency improvements of up to three times in inference operations.”
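The memory figures above lend themselves to a quick back-of-the-envelope check. The Python sketch below uses only the capacities and bandwidth quoted in this article, plus one labeled assumption that the article does not state: eight HBM3E stacks per GPU. The function names are illustrative only.

```python
# Figures quoted in the article.
B200_MEMORY_GB = 192
B300_MEMORY_GB = 288
B300_BANDWIDTH_TBPS = 8.0  # TB/s per GPU

# ASSUMPTION (not stated in the article): eight HBM3E stacks per GPU.
ASSUMED_STACKS_PER_GPU = 8


def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


def per_stack(total: float, stacks: int) -> float:
    """Split a per-GPU total evenly across the assumed number of stacks."""
    return total / stacks


def full_memory_sweep_ms(capacity_gb: float, bandwidth_tbps: float) -> float:
    """Time to read the whole memory once at peak bandwidth (1 TB = 1000 GB)."""
    seconds = capacity_gb / (bandwidth_tbps * 1000.0)
    return seconds * 1000.0


if __name__ == "__main__":
    growth = percent_increase(B200_MEMORY_GB, B300_MEMORY_GB)
    print(f"Memory capacity increase: {growth:.0f}%")
    print(f"Capacity per stack (assumed {ASSUMED_STACKS_PER_GPU} stacks): "
          f"{per_stack(B300_MEMORY_GB, ASSUMED_STACKS_PER_GPU):.0f} GB")
    print(f"Bandwidth per stack (assumed {ASSUMED_STACKS_PER_GPU} stacks): "
          f"{per_stack(B300_BANDWIDTH_TBPS, ASSUMED_STACKS_PER_GPU):.1f} TB/s")
    print(f"One full pass over memory at peak bandwidth: "
          f"{full_memory_sweep_ms(B300_MEMORY_GB, B300_BANDWIDTH_TBPS):.0f} ms")
```

The jump from 192GB to 288GB is itself a 50% increase in capacity. The per-stack numbers hold only under the eight-stack assumption, and the sweep time is a theoretical floor at peak bandwidth rather than a measured result.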

‘CoWoS’ Packaging Technology Raises Integration Density

The B300 series is available in two configurations: a dual-die variant built on CoWoS-L and a single-die variant built on CoWoS-S. CoWoS (Chip on Wafer on Substrate) is a packaging technology that integrates memory and logic semiconductors on a silicon-based interposer.

CoWoS-L combines CoWoS with TSMC’s InFO (Integrated Fan-Out) technology, reducing cost while improving performance. The approach minimizes mounting area and maximizes chip-to-chip connection speeds, which benefits high-performance computing (HPC) applications. The CoWoS-S variant, by contrast, relies on the proven conventional silicon interposer.

Thermal Management: From Conventional Air Cooling to Liquid Cooling

While the current B300 series uses conventional air cooling, NVIDIA CEO Jensen Huang announced a change for future systems, stating, “Our next-generation DGX systems will incorporate advanced liquid cooling technology.” Liquid cooling uses a specialized coolant to carry heat away from components, providing a more stable operating environment for demanding high-performance AI workloads.

Industry experts are also exploring immersion cooling, in which entire servers are submerged in a specially engineered cooling liquid to maximize heat dissipation. However, widespread deployment requires substantial modifications to data center infrastructure, which may delay broad market adoption.

Advancements in Network Connectivity

Alongside the B300 series, NVIDIA introduced enhanced Quantum networking solutions that support the latest PCI Express 6 standard. The ConnectX-6 InfiniBand smart adapter offers low latency and high message rates, with connection speeds of up to 200Gb/s through dual-port configurations.
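To put the 200Gb/s figure in context, note that link speeds are quoted in gigabits while memory capacities are quoted in gigabytes. The Python sketch below converts between the two and estimates how long it would take to move one GPU’s worth of memory (288GB, as quoted earlier) over such a link. It assumes the full line rate is achieved with no protocol overhead, which real transfers will not reach; the function names are illustrative only.

```python
BITS_PER_BYTE = 8

LINK_SPEED_GBPS = 200   # Gb/s, as quoted in the article
GPU_MEMORY_GB = 288     # GB per B300 GPU, as quoted in the article


def gigabits_to_gigabytes_per_s(gbps: float) -> float:
    """Convert a link rate in Gb/s to GB/s."""
    return gbps / BITS_PER_BYTE


def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Idealized transfer time: payload in GB over a link rated in Gb/s.

    ASSUMPTION: full line rate, no protocol or encoding overhead.
    """
    return size_gb * BITS_PER_BYTE / link_gbps


if __name__ == "__main__":
    rate = gigabits_to_gigabytes_per_s(LINK_SPEED_GBPS)
    seconds = transfer_seconds(GPU_MEMORY_GB, LINK_SPEED_GBPS)
    print(f"{LINK_SPEED_GBPS} Gb/s is {rate:.0f} GB/s at line rate")
    print(f"Moving {GPU_MEMORY_GB} GB takes about {seconds:.2f} s at best")
```

Compared with the 8TB/s of on-package memory bandwidth, this idealized figure shows why interconnect speed remains a central concern in multi-GPU systems.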

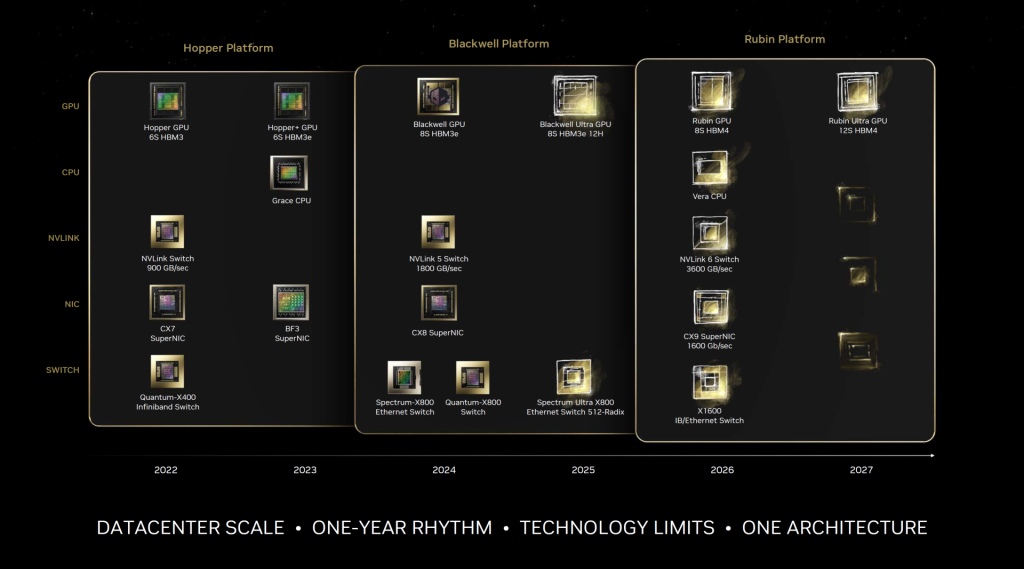

Roadmap: The Next-Generation Vera Rubin Architecture

To maintain its lead in the AI market, NVIDIA is expanding its product portfolio beyond high-performance server GPUs to include professional workstations and entry-level AI devices. The ‘Vera Rubin’ architecture, the successor to the current Blackwell generation of GPUs, is scheduled to enter initial production in the summer or fall of 2026.

GTC 2025 underscores the rapid pace of AI technology development, and the B300 series is positioned to drive further growth across the global AI industry.