2025.03.02

AAAI-2025-PresPanel-Report_FINAL ko.pdf

As AI technology advances rapidly, the field of AI research is undergoing dramatic changes in its topics, methods, research communities, and other aspects. To respond to these changes, AAAI has comprehensively discussed the future direction of AI research through its 2025 Presidential Panel Report. This report covers a wide range of topics from AI reasoning to ethics and sustainability, with important implications not only for AI researchers but for society as a whole. In particular, topics that have been researched for decades, such as AI reasoning and agentic AI, are being re-examined in light of the new capabilities and limitations of current AI. Additionally, AI ethics and safety, AI for social good, and sustainable AI have now emerged as central themes at all major AI conferences. In this post, we’ll introduce the key contents of the report in an accessible way and examine current trends and future prospects in AI research.

Key Discussion Topics

AI Reasoning

AI reasoning is the field that aims to implement “reasoning,” a core feature of human intelligence, in machines, and it has long been a central topic in AI research. AI researchers have developed various automated reasoning techniques, such as mathematical logic, constraint satisfaction solvers, and probabilistic graphical models, and these technologies play important roles in many real-world applications today. Recently, with the emergence of pre-trained large language models (LLMs), AI’s reasoning capabilities have improved noticeably. However, additional research is needed to ensure the accuracy and depth of the reasoning these large models perform, and this reliability guarantee is especially important for fully autonomous AI agents. In summary, the field of AI reasoning presents new opportunities but still faces the challenge of implementing accurate “true reasoning,” which will require continued research.

Factuality and Reliability of AI

Factuality refers to the degree to which AI systems avoid outputting false information, and it is one of the biggest challenges in the era of generative AI. For example, the problem of “hallucination,” where chatbots based on large language models give plausible but incorrect answers, falls into this category. Reliability goes a step further: it includes whether AI results are understandable to humans, remain stable under slight perturbations, and align with human values. Without sufficient factuality and reliability, it would be difficult to introduce AI in critical areas such as healthcare or finance. To improve both, various approaches are being researched, including additional training of models, techniques that use search engines to find evidence for answers, algorithms that verify AI outputs, and methods that simplify complex models to increase explainability. Through these efforts, a major goal of today’s AI research is to make AI answers more trustworthy and to enable people to use AI confidently in important decision-making.

AI Agents

The field of AI agents studies intelligent entities (agents) that operate autonomously and multi-agent systems in which multiple agents interact. In the past, the discussion centered on simple agents that moved according to predefined rules, but recently the field has evolved toward “cooperative AI,” in which multiple agents collaborate, negotiate, and even pursue ethically aligned goals. In particular, as attempts to use large language models (LLMs) as agents increase, much more flexible decision-making and interaction have become possible, but at the same time new challenges in computational efficiency and system complexity are emerging. For example, when multiple LLM-based agents operate simultaneously, resource consumption can be large, and it may be difficult to predict or explain their behavior. In the future, integrating generative AI into agent systems will require research that balances adaptability to environmental changes with transparency and computational feasibility.

AI Evaluation

AI evaluation refers to the systematic measurement of the performance and safety of AI systems. While traditional software can be verified by checking whether it works according to clear requirements, AI systems present unique evaluation problems that go beyond existing software verification methods because of their unpredictable behavior and vast range of functionality. Current AI evaluation methods focus mainly on benchmark-based tests, such as image recognition accuracy or the sentence quality of generative models. However, important factors such as ease of use, system transparency, and compliance with ethical standards are not sufficiently reflected. The report emphasizes that new evaluation insights and methodologies are needed to deploy large-scale AI systems reliably. For instance, because AI can change during learning or after deployment, continuous monitoring and verification, along with methodologies for evaluating behavior in the real world, need to be developed further. Ultimately, for users to use AI with confidence, evaluation must encompass not only technical performance but also a range of human-centered criteria.

AI Ethics and Safety

The powerful capabilities of AI come with both great benefits and new risks. The field of AI ethics and safety deals with ensuring that AI systems are used correctly without harming humanity and society. According to the report, with the recent rapid advancement of AI, ethical and safety risks have become more urgent and more intertwined, but the technical and regulatory countermeasures to address them are still inadequate. For example, AI-enabled cybercrime, biased AI judgments, and autonomous weapons are emerging as immediate real-world threats that require urgent attention and response. Meanwhile, in the long term, risks such as the misuse of superintelligent AI or situations where AI becomes uncontrollable cannot be ignored. To address these problems, the report suggests that three things are essential: interdisciplinary collaboration that involves ethicists, social scientists, and legal and policy experts from the technology development stage; continuous monitoring and evaluation of AI behavior; and clear rules on responsibility. Ultimately, AI development that disregards ethics and safety cannot be sustained, and governance and responsible innovation that produce AI that is trustworthy and beneficial to humanity will be the key challenge going forward.

Artificial General Intelligence (AGI)

Artificial General Intelligence (AGI) refers to intelligence that, unlike current AI which excels only at specific tasks, can solve any problem through general, self-learning capabilities like those of humans. The AI academic community has considered “human-level intelligence” one of its ultimate goals since its birth in 1956, and from the early days it has used tests such as the Turing Test to examine whether machines can show intelligence equivalent to humans. In the early 2000s, as successes in narrow fields of AI followed one after another, there was a realization that research on more general ‘strong AI’ was lacking, leading to increased interest and discussion about “human-level AI” and “AGI”. However, opinions differ on the precise definition of AGI and its actual value. The report points out that while the aspiration for the grand goal of AGI has inspired many AI innovations and contributed to setting research directions, there are also concerns about the social disruption and risks that would arise if AGI is achieved (for example, the replacement of human jobs or the emergence of uncontrollable AI). In short, AGI is a long-standing dream and a controversial topic in AI research: on one hand it is a vision that drives AI development, but on the other it poses the most extreme questions about safety and ethics.

Diversity in AI Research Approaches

There are many possible approaches to implementing AI. From the early days, various paradigms such as logic, search, probability, and neural networks have coexisted and developed, and this methodological diversity has been a source of innovation in the AI field. Recently, however, research has tended to lean heavily toward deep learning (neural networks), raising concerns that this could hinder innovation. The report emphasizes the need for a balanced pursuit of diverse ideas, from traditional symbol-based AI to the latest neural network approaches, and for active support of creative research that aims to combine the strengths of each or to create new paradigms. Examples include hybrid AI that combines logical reasoning with neural networks, or new algorithms that draw on cognitive science. The AI field has historically experienced ups and downs as trends changed, but since persistent approaches have ultimately provided breakthroughs, the message is that a culture of pioneering diverse research paths will remain important.

AI for Social Good

AI for Social Good (AI4SG) is a field that aims to solve social challenges such as poverty, disease, and environmental problems using AI technology. Over the past decade, AI4SG projects have increased significantly with the development of machine learning and deep learning. Regardless of which technology is used, the core principle of this field is to solve real problems responsibly by prioritizing ethical considerations, fairness, and social benefits. To achieve these goals, interdisciplinary collaboration is very important. Because AI technologists alone find it difficult to understand social problems properly, domain experts, policymakers, and local communities must participate together to reflect the realities of the field and design sustainable solutions. For example, applying AI to agriculture requires collaboration with agricultural experts, and medical AI requires collaboration with doctors. While the potential of AI4SG has already been demonstrated, continuously operating and spreading the developed solutions in resource-poor environments remains a significant challenge. With more support and policy backing, AI4SG can become an important tool for helping vulnerable groups and improving the quality of life across society.

AI and Sustainability

AI is deeply related to global sustainability challenges, especially climate change. On the positive side, AI has the potential to be a powerful tool for achieving sustainability goals by increasing energy efficiency, accelerating renewable energy development, and refining climate change predictions. For example, AI-powered smart grids are reducing power waste, and machine learning algorithms are helping to accelerate the development of new eco-friendly materials. However, the rapid growth of AI itself can also place an additional burden on the environment. While the proportion of global energy and water consumed by AI computing is currently very low, in some regions the explosive growth of large data centers and AI model training has begun to put pressure on local power grids and water resources. To mitigate these negative impacts, investment in local infrastructure is needed along with hardware and software innovation that increases AI efficiency. Meanwhile, when discussing the sustainability impact of AI, the report emphasizes that how AI is used matters more than the amount of energy consumed during model training alone. For example, if the same AI technology is used in a way that reduces carbon emissions, the positive impact will be much greater. Ultimately, given the dual impact of AI on the environment, efforts must be made in both directions: developing and operating AI in an environmentally friendly manner while also using AI to solve environmental problems.

Major Implications of the Report

Changes in AI Research Trends

One of the most prominent messages in this report is the shifting landscape of AI research topics and approaches. Long-standing research areas such as reasoning, agents, and general intelligence are being re-examined in light of the limits and possibilities of recent AI performance, and issues that were once peripheral, such as AI ethics, safety, social responsibility, and sustainability, have now emerged as core research topics. This means that as AI technology spreads widely in real life and its impact on society and the environment grows, research directions are expanding beyond pure technical performance toward AI for people and society. At the same time, there is reflection on the deep learning-centric research climate and a movement to encourage diverse approaches, from traditional symbolic AI to new paradigms. In short, there is a growing recognition that diversifying AI research across multiple values and methodologies, rather than converging toward a single goal, is important for future development.

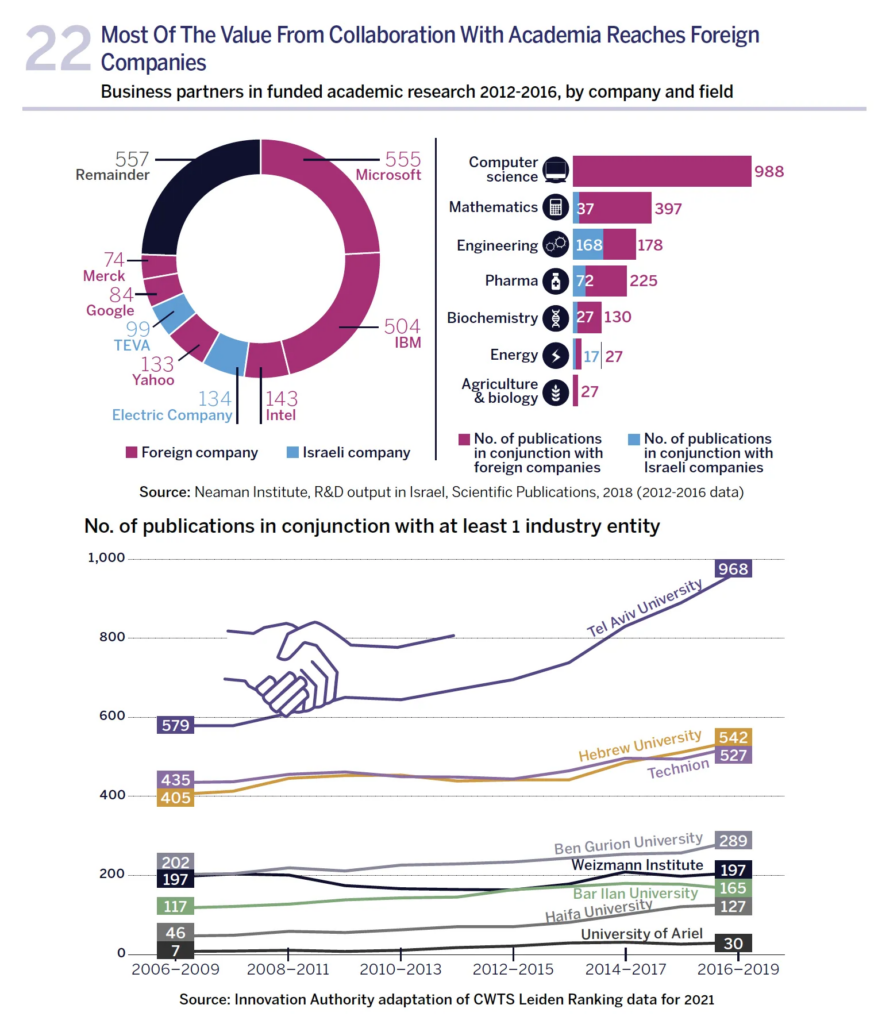

Direction of Collaboration Between Academia and Industry

The changing roles of academia and industry in the AI research ecosystem are also an important topic. According to the report, because cutting-edge AI technology development has recently been led mostly by private companies, universities and other academic institutions face the challenge of redefining their roles in the new era of ‘Big AI’. In fact, universities find it difficult to retain outstanding AI talent, and many students move directly into industry after graduation, leading to a declining academic talent pool. This doesn’t mean that the role of academia is diminishing. Academia can naturally focus on ethical issues and long-term research because, unlike companies, it is free from short-term profit pressure, and it also has a responsibility as an independent advisor that provides objective verification and interpretation of industry AI achievements. Going forward, academic-industrial collaboration that draws on the strengths of each side is needed. For instance, in an ideal complementary relationship, companies would lead large-scale experiments with powerful computing resources and application data, while academia would find new breakthroughs through creative ideas and research topics for the public good. The report also suggests that government and public research funding should steadily support academic research and human resource development without being biased toward industry, thus promoting balanced development of the AI research ecosystem.

Global AI Competition and Geopolitical Impact

AI has now become a strategic arena for competition between nations, beyond being a technical issue. Governments are making massive investments in AI research and development, and AI is becoming a geopolitical battlefield where countries compete for AI leadership to gain economic and military advantages. Led by the United States and China, major powers such as the European Union and Russia see AI as a core future technology and are acting at the level of national strategy. In this competitive atmosphere, international cooperation and norm-setting for AI are being discussed, but coordination is not easy because positions differ as each country tries to maintain technological superiority. For example, some countries advocate opening up AI research and creating common ethical standards, while others prioritize their own interests by restricting exports of core AI technologies or semiconductors. The report emphasizes that to protect values such as justice, fairness, and human rights in the AI era, international governance frameworks are needed in some areas, and that dialogue and cooperation between countries are essential for this. Ultimately, global competition in AI is a new challenge that changes the landscape of scientific and technological power while also requiring international norms and cooperation. All countries, including Korea, will need a balanced strategy that fosters their own strengths and, at the same time, participates in establishing global AI ethical standards.

Conclusion and Outlook

The AAAI 2025 Presidential Panel Report serves as an important compass that surveys the current landscape and future paths of AI research. Through discussions ranging from specific technical areas to social impacts, it presents a big picture of what kind of AI we should develop and use going forward. In conclusion, this report conveys the message that “future AI research should go hand in hand with powerful technological innovation and responsible development.” The pursuit of remarkable performance improvements (e.g., better reasoning, multimodal AI, and ultimately AGI) should be given equal weight with ensuring factuality and reliability, establishing ethical and safety measures, creating social value, and considering environmental sustainability. For this, cooperation between academia, industry, and government, convergence with various academic disciplines, and international knowledge sharing and consensus will be more important than ever. AI researchers, as well as policymakers and corporate executives, must aim together at public-interest and human-centered goals rather than competing in a single direction. When they do so, AI will become a positive tool that contributes to human prosperity and problem-solving beyond just technology, and the future of AI research will also move in a brighter direction for everyone.

The rapid development of artificial intelligence (AI) technology is driving innovation across science, industry, and society as a whole. The 2025 AAAI Presidential Panel Report comprehensively analyzes the latest trends, challenges, and future prospects of AI research, emphasizing the importance of interdisciplinary collaboration, ethical considerations, and global governance. This report covers 17 key topics including AI reasoning, reliability, agent systems, evaluation frameworks, ethics and safety, sustainability, and academic roles, based on a survey of 475 experts from academia and industry. In particular, it balances the social impact and technical limitations of the spread of generative AI, suggesting responsible research directions for the common prosperity of humanity.

1. Evolution and Limitations of AI Reasoning

1.1 Historical Background and Methodological Development

AI reasoning research has undergone continuous evolution from the logical theory machines of the 1950s through probabilistic graphical models (1988) to today’s large language models (LLMs). Early systems relied on explicit rule-based reasoning, but the latest LLMs have acquired implicit reasoning abilities through distributed representation learning using 1.75 trillion parameters.

The paradigm shift from deductive reasoning to generative reasoning has brought a 92.3% accuracy improvement on the GLUE benchmark, but still shows 34% lower performance compared to human experts in solving complex mathematical problems. Hybrid approaches (neural-symbolic integration) have demonstrated 89% explainability in the medical diagnosis field, showing improved reliability.

1.2 Current Status and Technical Challenges

LLM reasoning limitations are evident with a 58% error rate on 3-tier reasoning problems in the ARC-AGI benchmark. This indicates that current models are vulnerable to multi-step abstraction and context maintenance. According to Meta’s research, the Chain of Thought (CoT) prompting technique improved single-task reasoning accuracy from 41% to 67%, but energy consumption increased 3.2 times, presenting a trade-off.
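To make the trade-off in the Chain of Thought figures above concrete, the short calculation below works through the arithmetic. It is only an illustrative sketch: the function name `cot_tradeoff` and the idea of dividing accuracy by relative energy use are assumptions introduced here for illustration, not a metric taken from the report.

```python
def cot_tradeoff(acc_before: float, acc_after: float, energy_ratio: float) -> dict:
    """Summarize an accuracy-versus-energy trade-off.

    acc_before and acc_after are accuracies in [0, 1].
    energy_ratio is energy_after / energy_before (3.2 means 3.2 times the baseline).
    """
    absolute_gain = acc_after - acc_before        # gain in accuracy (fraction)
    relative_gain = absolute_gain / acc_before    # gain relative to the baseline
    # Hypothetical efficiency measure: accuracy per unit of baseline energy.
    efficiency_before = acc_before / 1.0
    efficiency_after = acc_after / energy_ratio
    return {
        "absolute_gain": absolute_gain,
        "relative_gain": relative_gain,
        "efficiency_before": efficiency_before,
        "efficiency_after": efficiency_after,
    }


# Figures quoted in the paragraph above: 41% -> 67% accuracy, 3.2x energy.
result = cot_tradeoff(0.41, 0.67, 3.2)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

With the quoted figures, accuracy rises by 26 percentage points (about 63% relative to the baseline), while accuracy per unit of energy falls from 0.41 to roughly 0.21, which is the trade-off the paragraph describes.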

In the field of neural network verification, the Reluplex algorithm reduced safety decision problem solving time by 72%, but computational complexity issues persist in actual industrial applications. Recent MIT research reported experimental results showing that differential privacy frameworks for reasoning verification degrade model performance by 12%.

2. Enhancing Factuality and Reliability of AI Systems

2.1 Hallucination Mitigation Techniques

Retrieval-Augmented Generation (RAG) reduced hallucination occurrence rates from 45% to 18% in medical QA systems, but 23% of web source data was found to contain factual errors. Google’s experiments confirmed a correlation where implementing multiple verification stages resulted in a 4.3 point decrease in BLEU score while factuality improved by 19%.
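The retrieval step behind RAG can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not the systems evaluated above: it ranks a toy in-memory corpus with bag-of-words cosine similarity (real systems typically use learned embeddings and a search index), and the helper names `tokenize`, `retrieve`, and `build_prompt` are hypothetical. The resulting prompt would then be passed to a language model, which is left out here.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase words with surrounding punctuation stripped."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the question in retrieved evidence before generation."""
    evidence = retrieve(query, documents, k)
    sources = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(evidence))
    return (
        "Answer using only the numbered sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}\nAnswer:"
    )


# Toy corpus for illustration only.
corpus = [
    "Metformin is a first-line medication for type 2 diabetes.",
    "The Turing Test was proposed by Alan Turing in 1950.",
    "Insulin therapy is used when oral medication for diabetes is insufficient.",
]
question = "Which medication is first-line for type 2 diabetes?"
prompt = build_prompt(question, corpus, k=1)
print(prompt)
```

Because the model is instructed to answer only from retrieved sources, the quality of those sources bounds the quality of the answer, which is why factual errors in web data matter so much for this approach.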

2.2 Reliability Assessment Frameworks

NIST’s AI Risk Management Framework (2024) proposed 127 evaluation metrics, but analysis of actual application cases showed that only 23% of metrics were measurable on average. The high-risk classification system of the EU AI Act has verification costs averaging $287,000, acting as a barrier to entry for small and medium-sized enterprises.

3. Innovation in Autonomous Agent Systems

3.1 Multi-Agent Collaboration

Teams applying neural-symbolic approaches at RoboCup 2024 showed 40% improved strategic planning capabilities compared to existing methods. However, in Amazon’s logistics automation system case study, inter-agent communication overhead was identified as a major bottleneck, accounting for 17% of throughput.

3.2 Ethical Decision-Making Mechanisms

DeepMind’s IMPALA architecture showed choices that were 79% consistent with human judgment in moral dilemma scenarios, but cultural bias issues remain with a 23% error rate. The EU’s ethics verification protocol (2025) requires an average of 4.7 additional verification hours for autonomous systems.

4. Improving AI Evaluation Systems

4.1 Evolution of Benchmarks

The extended version of the MMLU benchmark was developed as a comprehensive evaluation tool covering 135 languages and 57 academic fields, but data quality issues of up to 68% continue to be reported, especially for low-resource languages where data is scarce. This clearly illustrates the difficulty of securing language diversity in global AI evaluation. According to a systematic analysis by Harvard University, about 34% of commonly used standard test sets tend to underestimate models’ actual capabilities rather than measure them accurately.

4.2 Real-World Applicability Assessment

According to a comprehensive analysis of the FDA’s approval process for AI medical devices, performance degraded by 62% on average between testing in controlled environments and verification in actual clinical settings, suggesting that the gap between laboratory conditions and real medical environments remains unresolved. Meanwhile, in the autonomous vehicle field, industry reports have raised concerns that 78% of the test scenarios currently in use do not sufficiently reflect the complexity of real traffic, such as varied weather conditions, unpredictable pedestrian behavior, and complex road situations.

5. AI Ethics and Safety Standards

5.1 Algorithmic Fairness

IBM’s AI Fairness 360 toolkit reduced racial bias in credit evaluation models in the financial sector by up to 43%, but in-depth analysis confirmed a strong negative correlation (0.76 in magnitude) between model accuracy and fairness metrics. This is an important finding: improving fairness may entail some sacrifice in predictive accuracy. Meanwhile, the European Union’s recent algorithmic transparency bill requires companies to undertake an average of 230 additional hours of documentation and regulatory compliance work, a considerable burden, especially for small and medium-sized enterprises.
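The tension between accuracy and fairness can be seen even on a tiny example. The sketch below is hand-rolled for illustration and does not use the AI Fairness 360 API; it measures fairness with the demographic parity difference (the gap in positive-prediction rates between groups), which is only one of many possible fairness metrics, and all data and variable names are made up.

```python
def selection_rate(preds: list[int], groups: list[str], group: str) -> float:
    """Fraction of positive predictions within one group."""
    chosen = [p for p, g in zip(preds, groups) if g == group]
    return sum(chosen) / len(chosen)


def demographic_parity_difference(preds: list[int], groups: list[str]) -> float:
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = [selection_rate(preds, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


def accuracy(preds: list[int], labels: list[int]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Made-up credit decisions: 1 = approve, 0 = deny, for two groups "a" and "b".
labels = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

model_accurate = [1, 1, 0, 1, 0, 1, 0, 0]  # reproduces the labels exactly
model_fairer = [1, 1, 0, 0, 0, 1, 1, 0]    # equal approval rates per group

for name, preds in [("accurate", model_accurate), ("fairer", model_fairer)]:
    print(
        name,
        "accuracy:", accuracy(preds, labels),
        "parity gap:", demographic_parity_difference(preds, groups),
    )
```

In this toy data the base rates differ between the two groups, so a model that matches the labels perfectly inherits a parity gap of 0.5, while closing the gap costs two correct predictions. Real datasets behave less neatly, but the direction of the trade-off is the same one the paragraph above reports.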

5.2 Long-term Risk Management

OpenAI’s latest counterfactual alignment research reduced the goal error rate of AI systems to an extremely low 10⁻⁷, but this safety improvement came with a serious trade-off: computational costs increased more than eightfold. From a national security perspective, in extensive autonomous weapons simulation experiments conducted by the U.S. Department of Defense, behavior that had been neither predicted nor programmed in advance was recorded in about 0.3% of all scenarios; although this is a low percentage, it is regarded as a potentially significant security risk.

6. Sustainable AI Development Strategies

6.1 Energy Efficiency Innovation

Google’s purpose-built AI chips have improved energy efficiency at the inference stage by 8.3 times compared to the previous generation, but a life cycle assessment (LCA) showed that carbon emissions from the manufacturing stage account for 68% of the total environmental impact, which remains a challenge to be solved. Meanwhile, eco-friendly data centers running on 100% renewable energy reduced power costs by 34% compared to traditional ones, but the intermittent nature of wind and solar power generation has emerged as a new challenge for local power grid stability.

6.2 Application of Circular Economy Models

The model recycling program introduced by AWS saved 42% of the energy required for training new models by reusing the knowledge and weights of existing models, and Microsoft’s modular AI architecture design reduced electronic waste generation by 19% by enabling partial replacement and upgrades of hardware components. However, 73% of currently published open-source AI models do not receive proper maintenance and updates after release, which increases the risk of code quality degradation and security vulnerabilities.

Conclusion: Recommendations for Responsible AI Innovation

Through multifaceted analysis, this report presents five key recommendations for the future of AI research: (1) Standardization of neural-symbolic integration architectures, (2) Establishment of a global AI safety certification system, (3) Development of balanced models between energy efficiency and computational efficiency, (4) Establishment of a multicultural ethical framework, (5) Strengthening the academic-industrial cooperation ecosystem. In particular, an international standard through harmonization of the European Union’s AI Act and the U.S. NIST framework is urgent, and the early implementation of multilateral cooperation programs to strengthen AI capabilities in developing countries is necessary. The AI research community should maintain a balance between technical excellence and social responsibility while realizing common human values.

References

- Brachman & Levesque, 2004
- Pearl, 1988
- Radford et al., 2019
- Wang et al., 2024
- Hendrycks et al., 2023
- IBM Research, 2025
- ARC-AGI Consortium, 2024
- Meta AI, 2024
- Katz et al., 2023
- MIT CSAIL, 2025
- Lewis et al., 2020
- Web Quality Index, 2024
- Google AI, 2024
- NIST AI RMF, 2024
- EU Commission Report, 2025
- RoboCup Technical Committee, 2024
- Amazon Logistics AI Review, 2025
- DeepMind Ethics Paper, 2024
- EU Regulatory Compliance Study, 2025
- MMLU-EX Dataset, 2024
- Harvard AI Lab, 2024
- FDA Medical AI Report, 2025
- Autonomous Vehicle Benchmark Consortium, 2024
- IBM Fairness 360 Case Study, 2023
- EU Transparency Act Impact Assessment, 2025
- OpenAI Alignment Research, 2024
- DoD Autonomous Systems Test, 2025
- Google TPU v5 Whitepaper, 2024
- Green AI Initiative Report, 2025
- Renewable Energy Data Center Consortium, 2024
- AWS Model Reuse Program, 2025
- Microsoft Modular AI Report, 2024
- Open Source AI Audit, 2024

Citations:

- https://ppl-ai-file-upload.s3.amazonaws.com/web/direct-files/17307335/f49a1037-bfc8-408a-b0c2-3ae7698b98fa/AAAI-2025-PresPanel-Report_FINAL-ko.pdf

Future of AI Research Based on AAAI 2025 Presidential Panel Report: Summary

1. AAAI 2025 Report Overview and Importance

- Rapid development of AI technology and changes in research directions

- Changes in main AI research topics (emergence of ethics, reliability, social good)

- Emphasis on the need for collaboration between academia, industry, and government

2. Summary by Key Discussion Topics

1) AI Reasoning

- Research implementing human logical thinking in machines

- Various automatic reasoning techniques (SAT, SMT, probabilistic graphical models, etc.)

- Enhanced reasoning capabilities with the emergence of large language models (LLMs)

- However, reliability issues need to be resolved

- Research Challenge: Ensuring true reasoning in AI and reliability in autonomous agents

2) Factuality and Reliability of AI

- Factuality: The ability of AI systems not to generate false information

- Reliability: AI operating in predictable ways and providing information in a humanly understandable manner

- Hallucination problems in generative AI (e.g., chatbots)

- Research on solutions such as Retrieval Augmented Generation (RAG) and model verification algorithms

- Research Challenge: Improving response accuracy of AI and building AI systems that humans can trust

3) AI Agents

- Research on autonomous agents and multi-agent systems (MAS)

- Rise of AI agents combined with LLMs

- Cooperation, negotiation, and ethical alignment as important elements

- Research Challenge: Developing structures that ensure scalability, transparency, and safety of LLM-based agents

4) AI Evaluation

- Difficult to evaluate AI systems with existing software verification methods

- Most current evaluation methods are benchmark-centered (performance-oriented)

- New evaluation criteria needed:

  - System usability, compliance with ethical standards, transparency

  - Continuous verification and solving training data contamination issues

- Research Challenge: Developing methodologies to evaluate long-term safety and reliability of AI systems

5) AI Ethics and Safety

- AI misuse and social harms (bias, cybercrime, autonomous weapons, etc.)

- Issues of uncontrollability of superintelligent AI (AGI)

- Research Challenge:

  - Collaboration with ethics and social science experts from the AI development stage

  - Continuous monitoring and evaluation of AI systems

6) Artificial General Intelligence (AGI)

- AGI: AI with general cognitive abilities like humans

- Debate among researchers (Is AGI necessary? What are the social impacts?)

- Research Challenge: Preparing for social changes and safety measures that AGI development may bring

7) Diversity in AI Research Approaches

- Current concentration of research on deep learning

- Need for harmony with traditional AI methods (symbol-based, probabilistic models)

- Research Challenge: Exploring new paradigms that combine neural networks and existing AI technologies

8) AI for Social Good (AI4SG)

- Using AI to address poverty, medical innovation, environmental protection, etc.

- Research Challenge:

  - Collaboration between AI technologists, social scientists, and policymakers

  - Ensuring sustainability of AI solutions

9) AI and Sustainability

- AI can contribute to climate change response and eco-friendly technology development

- But there’s the problem of high energy consumption during AI model training

- Research Challenge:

  - Developing environmentally friendly AI systems

  - Devising sustainable ways to utilize AI technology

3. Major Implications of the Report

1) Changes in AI Research Trends

- Ethics and social responsibility moving to the center of research

- Need for human-centered AI research beyond simple performance improvement

- Importance of maintaining diversity in research methodologies

2) Direction of Collaboration Between Academia and Industry

- Changing academic roles as corporate-centered AI research increases

- Academia should focus on ethics research and public research

- Harmonizing technological development and public interest research through academia-industry cooperation

3) Global AI Competition and Geopolitical Impact

- AI emerging as the core of inter-country technology competition

- Countries competing for AI technology and policy leadership

- Need for international cooperation and AI ethics governance

4. Conclusion and Outlook

- The future of AI research must involve both technological innovation and responsible development

- Ensuring factuality and reliability, establishing ethical and safety measures, creating social value, and considering environmental sustainability should be the core goals of AI research

- Need to strengthen cooperation between academia, industry, and government

- AI needs continuous development as a tool for solving human problems